In our previous blog (https://digitalenvironment.org/the-nvidia-jetson-nano-first-look-and-setup) we explored setting up and running the amazing Jetson Nano Developer Kit from NVIDIA. This little unit is capable of about half a teraflop of floating-point calculations using its Maxwell-architecture GPU, which is pretty impressive for a low-cost unit. In this blog we follow the ‘Two Days to a Demo’ Hello AI World exercises. This is an excellent and well-written course that takes the user through the various tasks of AI machine vision, with tutorials, examples and helpful ‘how to’ videos. The initial exercises use pre-trained models for object detection and image classification.

The first task is to fire up a Docker container within the Nano environment. One can build the NVIDIA JetPack libraries and tools natively on the Nano, but for learning purposes it is far more convenient to use the pre-prepared Docker images with all the files and folder structures already in place.

Downloading and running the Docker container is straightforward, and is described at https://github.com/dusty-nv/jetson-inference/blob/master/docs/aux-docker.md. A script is provided in the git repo which, when run, collects all the components of the Docker image. The first time this is run it takes a while, as all the parts are downloaded. After that, starting up the container is very fast.

    $ git clone --recursive https://github.com/dusty-nv/jetson-inference
    $ cd jetson-inference
    $ docker/run.sh

Within the Docker container, there are various tools NVIDIA have provided to get one started. There is an object detection tool (in Python and C++), ‘detectnet’, and a similar image classification tool, ‘imagenet’. Both are configured to use a default pre-trained model, namely ‘googlenet’ for the imagenet tool, and ‘ssd-mobilenet-v2’ for the detectnet tool.

A tool is provided to enable other pre-trained models to be downloaded and run; a full list of the models available is at https://github.com/dusty-nv/jetson-inference#pre-trained-models. We decided to introduce an image of our own, of ‘Torba’, our crazy eastern-European sheepdog of unknown lineage, to see what the models made of him. First off we tried the detectnet object detection, but using the ‘DetectNet-COCO-Dog’ pre-trained model. This model correctly identified a canine object in the image, but with a confidence of only 50.2%.
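To put that 50.2% in context, here is a minimal sketch of how a detection tool typically filters its output by confidence. It does not use the jetson-inference API: the `Detection` class and `keep_confident` function are our own illustrative names, the 0.5 default threshold is an assumption, and the second, weaker detection is made up purely for the example.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str
    confidence: float  # fraction between 0.0 and 1.0


def keep_confident(detections, threshold=0.5):
    """Return only the detections whose confidence meets the threshold."""
    return [d for d in detections if d.confidence >= threshold]


# 0.502 is the confidence reported for Torba; the 0.31 entry is hypothetical.
detections = [Detection("dog", 0.502), Detection("dog", 0.31)]

print(keep_confident(detections))                 # the 0.502 detection survives
print(keep_confident(detections, threshold=0.6))  # nothing survives a stricter cut
```

With a threshold of 0.5 the dog only just scrapes through, so a slightly stricter setting would have reported no dog at all.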

Next, we decided to try the image classification, first accepting the default ‘googlenet’ pre-trained model. This time we obtained a ranked list of candidate breeds for the dog, each with a probability. Well, it worked, and the top-ranked class was Briard. That doesn’t sound right, but there are other breeds listed as well, all identified with probabilities.
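The ranked list of breeds can be understood with a small sketch. We assume here that the network ends in a softmax layer, which is the usual arrangement for image classifiers, turning raw class scores into probabilities that sum to one. The scores and the placeholder breed names below are made up for illustration and are not the values from our run.

```python
import math


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(labels, scores, k=3):
    """Return the k most probable (label, probability) pairs, best first."""
    probs = softmax(scores)
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical scores: only the top-ranked 'Briard' comes from our run.
labels = ["Briard", "breed B", "breed C", "breed D"]
scores = [2.0, 1.0, 0.5, 0.1]

for label, prob in top_k(labels, scores):
    print(f"{label}: {prob:.1%}")
```

Because the probabilities are shared out among the classes the model knows, the top class is simply the best available match, not necessarily a correct one.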

The exercise was then repeated, but this time we first downloaded some of the other pre-trained models to try. A tool is provided by NVIDIA to select from a range of these, which can be downloaded into the Docker container. The models are copied into Docker bind mount points so that they persist between sessions. This is a neat feature of Docker and is something we covered in an earlier blog https://digitalenvironment.org/using-docker-with-bind-mount-points/. Below we are loading in the VGG-19 convolutional neural network (CNN) model.

We are now ready to try a few of the other models, to evaluate how well they perform. One thing NVIDIA note is that models do vary in complexity, and the more complex they are, the longer they take to run. This we can confirm, even with a GPU! The tools have a stage where, on first run, the models are read in and prepared for use. This process does take a while, but once complete the subsequent running of models is relatively fast. First we tried the ‘ResNet-18’ model. This is a small model with just 18 layers, and it shows, classifying the dog as a Pekinese! Definitely not right! So much for that model…

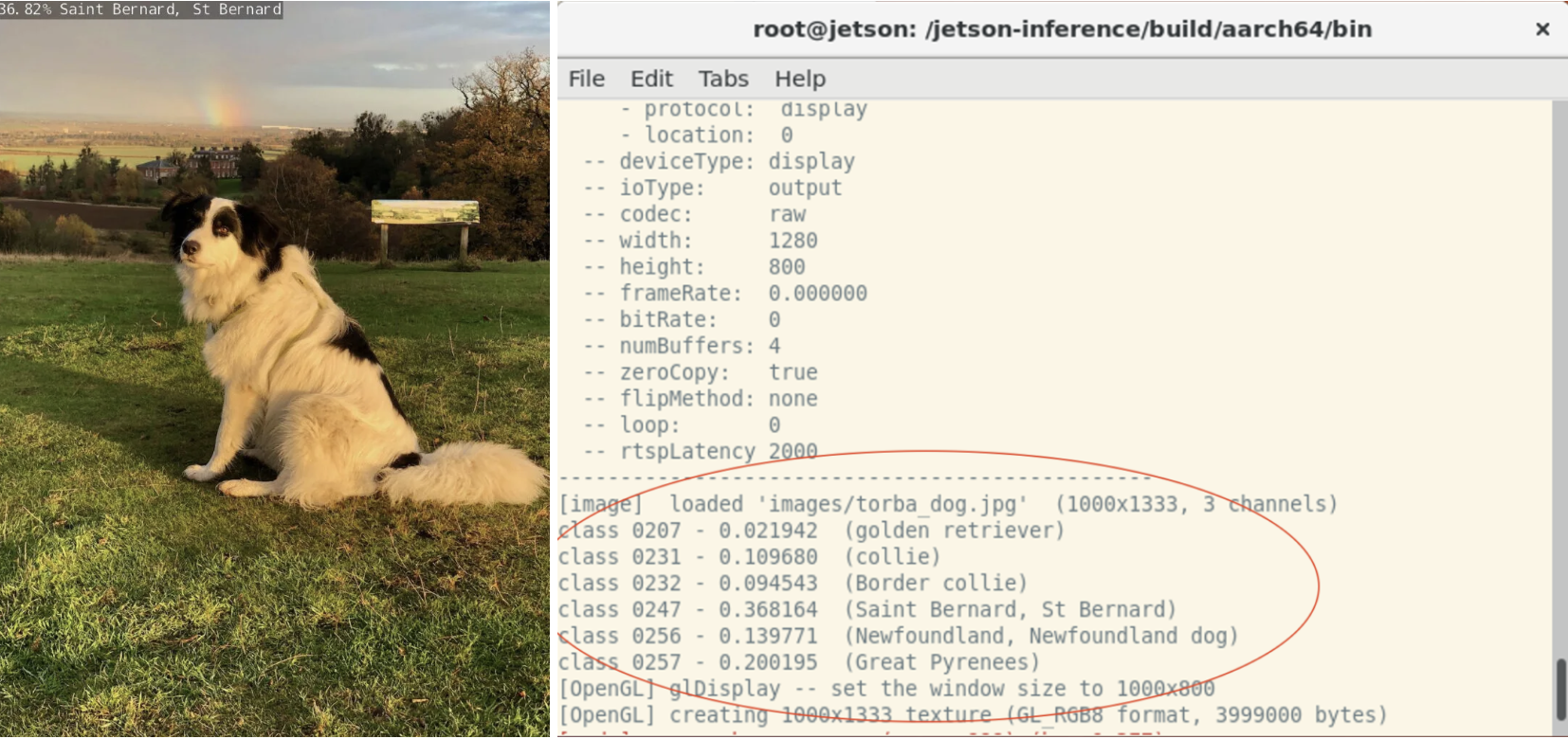

Next, we moved up to the ‘ResNet-50’ model, a more complex model, with some better results. ResNet-50 has 50 layers, and identifies the dog as mostly Saint Bernard, with a bit of the French Great Pyrenees and some Collie. This seems better, but could it be improved?

Next we tried the more complex ‘ResNet-152’ model to see what improvements that offered. It seems to be settling on Great Pyrenees, but now with Collie and Border Collie mixed in. What other models are there we can try?

Next we tried the ‘AlexNet’ model to see what that produced. Now the result changes and the dog is back to a Saint Bernard again, but the French Great Pyrenees is there too.

Finally we tried the ‘VGG-19’ model we showed being downloaded above. Again we see the French Great Pyrenees, now with a Golden Retriever, and still the Saint Bernard. The Collie is dropping back down the ranks.
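Each of the runs above amounts to calling the imagenet tool with a different network name. The sketch below only builds and prints the command lines rather than executing them, so it can be tried anywhere. The `--network` option and the network name strings are as we recall them from the jetson-inference documentation and should be checked against it; the image file names are hypothetical.

```python
import shlex

# Network names as we understand the imagenet tool expects them (an assumption).
NETWORKS = ["googlenet", "resnet-18", "resnet-50", "resnet-152", "alexnet", "vgg-19"]


def imagenet_command(network, image_in, image_out):
    """Build the argument list for one classification run."""
    return ["imagenet.py", f"--network={network}", image_in, image_out]


for net in NETWORKS:
    # 'torba.jpg' and the output names are placeholders for our own files.
    cmd = imagenet_command(net, "torba.jpg", f"torba_{net}.jpg")
    print(shlex.join(cmd))
```

Inside the container, each printed line could then be run by hand, or passed to `subprocess.run` as a list.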

So this was an interesting set of experiments to see what breed the different classifiers arrived at. Bearing in mind that these are pre-trained models built from very large numbers of training photos, the results were decidedly mixed, and not always in agreement. The object detection with ‘DetectNet-COCO-Dog’ did locate a dog in the image, but only at 50.2% confidence. The image classifiers also had mixed success. Overall, perhaps the ‘ResNet-152’ model performed the most intuitively (and slowly), and ‘AlexNet’, developed by Alex Krizhevsky, was not bad either.
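One simple way to summarise the disagreement is to count how many of the models mentioned each breed. The sketch below uses only the breeds reported in the runs above; it ignores the probabilities, which we have not reproduced here, and the `tally` function is our own illustrative helper.

```python
from collections import Counter

# Breeds mentioned for each model in the runs described above.
mentions = {
    "googlenet": ["Briard"],
    "resnet-18": ["Pekinese"],
    "resnet-50": ["Saint Bernard", "Great Pyrenees", "Collie"],
    "resnet-152": ["Great Pyrenees", "Collie", "Border Collie"],
    "alexnet": ["Saint Bernard", "Great Pyrenees"],
    "vgg-19": ["Great Pyrenees", "Golden Retriever", "Saint Bernard"],
}


def tally(mentions):
    """Count the number of models that mention each breed at least once."""
    counts = Counter()
    for breeds in mentions.values():
        counts.update(set(breeds))
    return counts


counts = tally(mentions)
for breed, n in counts.most_common():
    print(f"{breed}: {n} of {len(mentions)} models")
```

On this crude count the Great Pyrenees appears most often, followed by the Saint Bernard and then the Collie.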

Epilogue

The learning point from all these exercises is epitomised by the difference between interpolation and extrapolation. A classifier can interpolate among the classes it saw in training, but it cannot extrapolate to a breed it has never seen. No matter how good the classifier models are, they are constrained by the training data they have to work with. We believe the dog actually to be a Tornjak, perhaps crossed with a smaller Collie-type breed. The Tornjak is a breed from Bosnia and Herzegovina and Croatia, and quite simply there aren’t enough of these dogs in the USA, where the training libraries probably derive from, for the breed to have featured significantly in the training images used. Where big Molossus-type dogs, such as the Great Pyrenees and Saint Bernard, have been included, the models have picked up on the similarity.

This is all reminiscent of lessons from an earlier science project involving soundscape detection of bat calls, using machine learning to determine the species making the ultrasonic calls recorded. The system faithfully identified a bat recorded in Bedfordshire, UK as a particular species. A bat expert we consulted immediately noted that this species is not present in the UK, being a Southern European inhabitant, so the identification couldn’t be right. Again, the selection of the training data used is key, and should be a major consideration in any machine learning project. Then again, perhaps it was a very, very rare bat that was blown north over the Channel, and for that matter the dog does bark with a French accent and likes sheep! @torba_dog
